Intercept and THINC scheme¶

Overview¶

In the previous section, the surface normal is computed at each cell center. This information is, however, not enough to uniquely define the surface position uniquely:

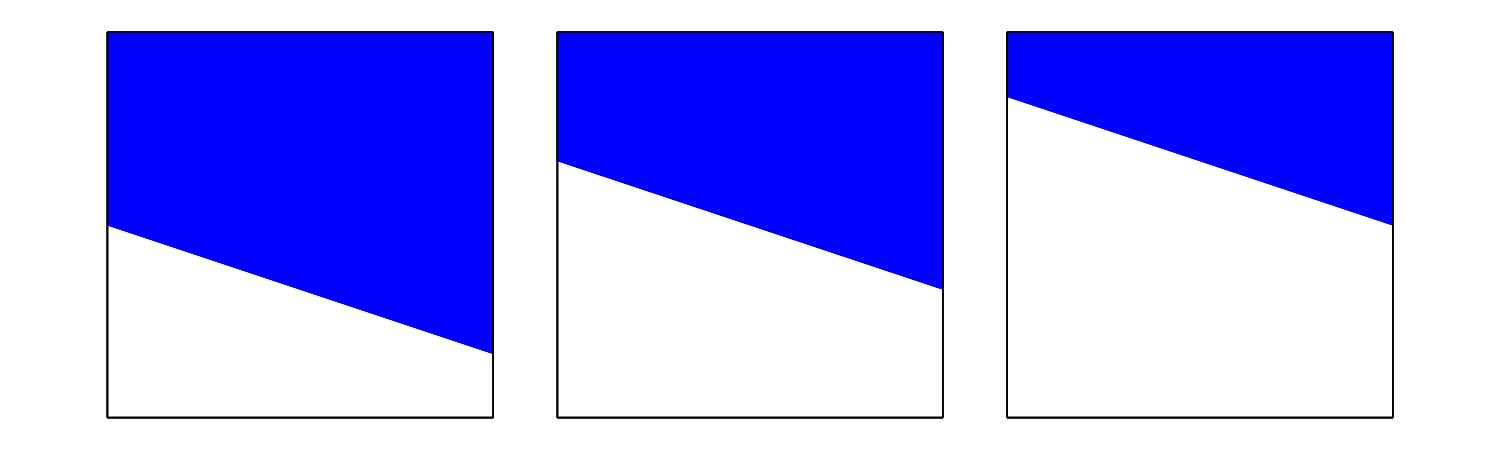

Three different interfacial positions having the same surface normal.¶

In this image, for example, although all three surface patterns have the same surface normal, they are obviously different.

Since the three cases have the different occupations, I use the information to fix the surface position:

which is the definition of \(\phi\).

Note

In the previous section, I defined local coordinate systems such that the size of the computational cells \(V_{ij}\) is \(1\). The origin of the local coordinates locates at the cell center, and as a result the cell edges exist at \(x = \pm \frac{1}{2}\) and \(y = \pm \frac{1}{2}\).

Mathematically or physically speaking, since the free surface is discontinuous, \(H\) is a step function. Conventional VOF methods solve the equation based on this assumption.

In this project, I treat this problem in a different way here: instead of \(H\) (step function), I consider a slightly smoothed function \(\hat{H}\):

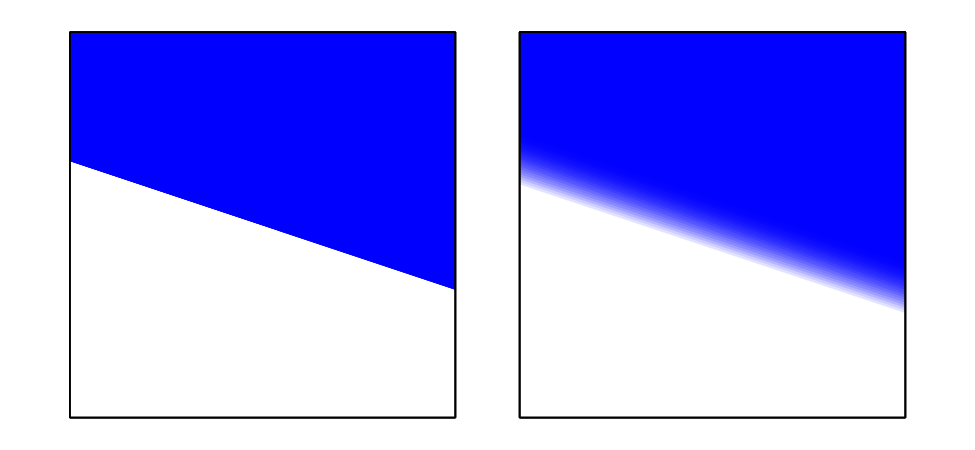

Sharp (left, \(H \left( x, y \right)\)) and smoothed (right, \(\hat{H} \left( x, y \right)\)) interfacial representations.¶

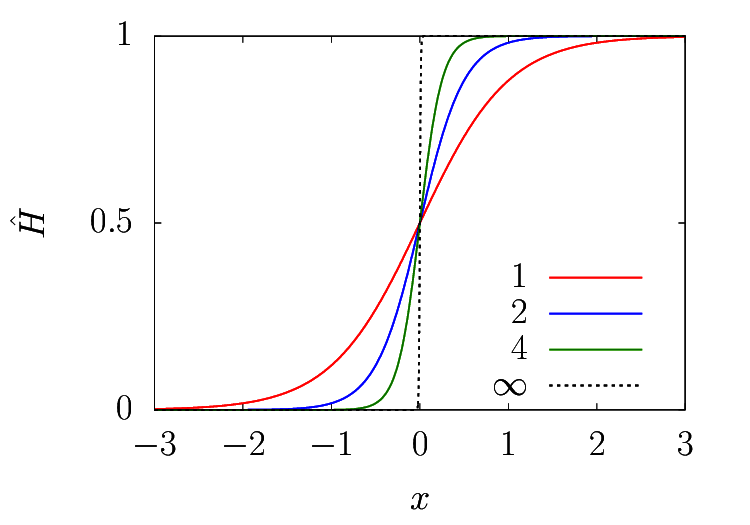

\(d\) is a parameter to be defined here, giving the surface position. \(\beta\) is a sharpness parameter which specifies the thickness, which corresponds to the Cahn number \(Cn\) in the phase-field methods:

Interface sharpness as a function of the control parameter \(\beta\). \(\beta \rightarrow \infty\) leads to \(H\). \(\Delta x = 1\) corresponds to the size of the grid.¶

Note

The larger the \(\beta\) is, the sharper the surface becomes and the more realistic it is. However, there are two drawbacks for large \(\beta\):

Inaccurate surface representation

As discussed below, the numerical integral of \(\hat{H}\) is justified by the fact that \(\hat{H}\) is smooth. Larger \(\beta\) violates this assumption, and higher-order Gaussian quadratures are needed to accurately compute the surface position, which is computationally demanding.

Large spurious currents

THINC schemes tend to suppress the spurious currents well compared to the conventional VOF method, which comes from the diffused nature of the surface. Larger \(\beta\) diminishes this advantage.

Recall that here I want to decide \(d\) to uniquely decide the position of the local surface. Although the above equation has an analytical solution for two-dimensional cases, it contains Eulerian logarithmic integrals, which is computationally expensive. Thanks to the diffused assumption of the surface, however, numerical integral gives a good approximation, namely:

where \(w_i\) and \(X_i\) are the weights and the points of \(N\)-th-order Gaussian quadrature defined in the interval \(\left[ -\frac{1}{2}, +\frac{1}{2} \right]\). Notice that the volume integral is replaced with the summations in all dimensions.

To obtain \(d\), I need to minimise the following function \(f\):

For simplicity, I introduce

here. Also I use an identity:

to yield

Since \(\exp{\left( x \right)}\) is a monotonic function, finding \(d\) is equivalent to finding the corresponding \(D \equiv \exp{\left(-2 \beta d \right)}\):

and its derivative (with respect to \(D\) instead of \(d\)) is

where

Newton-Raphson method¶

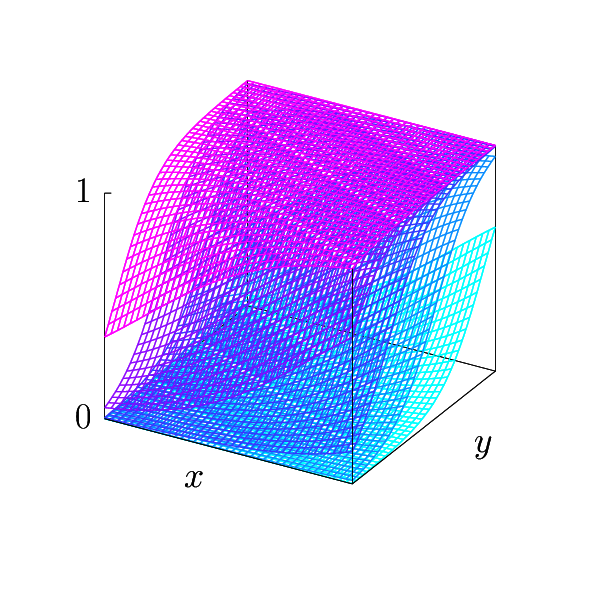

Roughly speaking, to obtain \(d\) (or \(D\)), I move the hyper-surface (see below) and find \(d\), whose (numerical) integral coincides with the given local volume fraction \(\phi\).

Hyper-surfaces \(\hat{H} \left( x, y, d \right)\) as a function of \(d\), while the normal vector is fixed.¶

which is achieved by the Newton-Raphson method following Xie and Xiao, J. Comput. Phys. (349), 2017.

Notice that \(P_{ij} = \exp{\left( -2\beta \psi_{ij} \right)}\) is independent of \(D\) and thus I compute them before starting the iteration:

106double exps[NGAUSS * NGAUSS] = {0.};

107for(int jj = 0; jj < NGAUSS; jj++){

108 for(int ii = 0; ii < NGAUSS; ii++){

109 exps[jj * NGAUSS + ii] = exp(

110 -2. * vofbeta * (

111 + normal[0] * gauss_ps[ii]

112 + normal[1] * gauss_ps[jj]

113 )

114 );

115 }

116}

119double exps[NGAUSS * NGAUSS * NGAUSS] = {0.};

120for(int kk = 0; kk < NGAUSS; kk++){

121 for(int jj = 0; jj < NGAUSS; jj++){

122 for(int ii = 0; ii < NGAUSS; ii++){

123 exps[(kk * NGAUSS + jj) * NGAUSS + ii] = exp(

124 -2. * vofbeta * (

125 + normal[0] * gauss_ps[ii]

126 + normal[1] * gauss_ps[jj]

127 + normal[2] * gauss_ps[kk]

128 )

129 );

130 }

131 }

132}

Also, to start the iteration, I need the initial \(D_0\). As a guess, I take the solution of the first-order Gaussian quadrature:

and

giving

and thus

135double val = 1. / vof - 1.;

Also the function

and its derivative

are computed here:

138 double f0 = -1. * vof;

139 double f1 = 0.;

140#if NDIMS == 2

141 for(int jj = 0; jj < NGAUSS; jj++){

142 for(int ii = 0; ii < NGAUSS; ii++){

143 const double weight = gauss_ws[ii] * gauss_ws[jj];

144 const double p = exps[jj * NGAUSS + ii];

145 const double denom = 1. / (1. + p * val);

146 f0 += weight * denom;

147 f1 -= weight * p * denom * denom;

148 }

149 }

150#else

151 for(int kk = 0; kk < NGAUSS; kk++){

152 for(int jj = 0; jj < NGAUSS; jj++){

153 for(int ii = 0; ii < NGAUSS; ii++){

154 const double weight = gauss_ws[ii] * gauss_ws[jj] * gauss_ws[kk];

155 const double p = exps[(kk * NGAUSS + jj) * NGAUSS + ii];

156 const double denom = 1. / (1. + p * val);

157 f0 += weight * denom;

158 f1 -= weight * p * denom * denom;

159 }

160 }

161 }

162#endif

\(D\) is updated using the computed \(f\) and \(f^{\prime}\):

which is implemented as

164val -= f0 / f1;

This iteration continues until the residual \(| f |\) becomes smaller than the given threshold or until the number of iterations exceeds the specified maximum:

102const int cntmax = 8;

103const double resmax = 1.e-12;

Finally I convert \(D\) to \(d\) following \(D = \exp{\left( -2\beta d \right)} \Leftrightarrow d = -\frac{1}{2\beta} \log{\left( D \right)}\):

170return -0.5 / vofbeta * log(val);

In the next step, I use this uniquely-determined surface to evaluate the flux to integrate the advection equation.